Welcome to issue #02 of the Cercle IA newsletter. Thank you to the first 631 subscribers who have placed their trust in me.

This week, a lawyer with 40 years’ experience asked me this question: “Do I (really) need to get into AI?”

The word “really” is revealing. It expresses both weariness in the face of constant injunctions to innovate and legitimate skepticism: beyond the media hype, what real gain can AI bring to someone whose career is already well established?

I couldn’t settle for a simple “yes”. I answered with a question: “How much longer do you want to stay active? Let’s meet again at the end of the summer to discuss it.”

But the question has stayed with me ever since. The answer I’m going to share with you is inspired by a maxim of Hillel the Elder, a great Jewish sage of the 1st century BC:

“If I’m not for myself, who will be?

If I am only for myself, what am I?

And if not now, when?”

In this issue:

- Getting to grips with AI: Hillel’s three questions applied to AI at work;

- The trap to avoid: a new MIT/Wharton/Harvard study revealing why letting juniors train seniors in AI can lead to failure;

- The AI tool to test: an indispensable tool for avoiding “false information” from AI.

One last thing: I won’t be covering this week’s hot topic, the release of GPT-5, OpenAI’s long-awaited new model. Comments are pouring in right now, and I’d rather share my own experience with it later, with a bit more hindsight.

The wise man’s first question: “If I am not for myself, who will be?”

Or why no one can learn AI for you

Ancient wisdom applied to modern AI

Hillel the Elder asked this question 2,000 years ago. Applied to AI, it becomes: no one is going to adopt AI for you.

Not your partner, not your assistant, not the trainee “who knows about computers”.

Here’s a fact to consider: AI is transforming the very nature of intellectual work. Delegating this transformation means agreeing to become a spectator of your own profession.

Three things you alone can do:

- Identify where AI excels in your business: without this knowledge, you’ll be working blind and won’t be able to draw the right conclusions;

- Recognize the limits of AI to avoid costly mistakes: as the Harvard-BCG study shared in the first issue shows, when you push AI beyond its capabilities, performance drops by 19 points;

- Develop your own operating reflexes: skill and intuition cannot be handed down. They are developed through practice.

The classic mistake? Waiting for “someone else” to test the tools, build the team and then explain it all to you. Meanwhile, your competitors are months ahead of you.

The wise man’s second question: “If I am only for myself, what am I?”

Or why a solitary approach to AI sabotages collective performance

Hillel’s second question addresses our collective responsibility. Individual adoption of AI is necessary, but dangerously insufficient.

Using ChatGPT on the sly? You’re choosing temporary personal gain over lasting organizational performance.

The 3 levels of AI impact

Hillel’s wisdom teaches us to think beyond ourselves. AI sends out ripples that spread ever wider, so we need to think in concentric circles: team, customers, profession.

First level: your team

The real gains lie in:

- Defining and disseminating shared best practices

- Creating hybrid human-AI processes

- Sharing learning AND failures

Case in point: an AI-savvy associate can revolutionize contract drafting. But if he keeps his prompts to himself, the firm remains vulnerable. What happens if he leaves? How do you train new employees?

Second level: your customers

Make no mistake: your customers are also experimenting with ChatGPT. They’re wondering whether they should use you or AI for certain needs.

Sooner or later, they will judge whether your technological choices really serve their interests, or only yours.

The key question is not “Should I adopt AI?” but “How do my AI choices really serve my customers?”

Third level: your profession

Every professional who masters AI is helping to shape the future of his or her sector:

- Collective education: You become an example for your peers

- Responsible innovation: Your use helps redefine standards and possibilities in your profession

- Just transition: AI is not neutral. The way you use it can help narrow inequalities or widen them.

Cross-functional issues

Certain questions cross all circles:

- How can we develop the jobs of employees whose tasks are automated?

- How can we ensure that AI reinforces human expertise rather than replacing it?

- What is the environmental impact of your use of AI?

The “selfish” vs. “systemic” approach: limiting yourself to the first circle means missing AI’s transformative potential.

Thinking in concentric circles means helping to build a professional future that is both more efficient and more humane.

The wise man’s third question: “And if not now, when?”

Or the relentless arithmetic of lost time

Hillel’s final question is about the right time to act.

Unlike previous technological revolutions, AI does not require colossal investments on the part of users. For €20/month, you get access to ChatGPT Plus. For €200/month, access to the most advanced tools.

The real cost? Learning time, and lost time can never be made up.

The adoption curve: we’re still in the early adopter phase. This window is closing. Soon, mastering AI will be a prerequisite, not an advantage.

For our lawyer with 40 years’ experience: in a few years’ time, clients will be comparing his efficiency with that of colleagues who combine legal experience with AI skills. Forty years’ expertise will remain an asset, but it will no longer compensate for a 40% productivity gap.

The expertise trap: the more expert you are, the more easily you fall into the illusion that your advantage will last. But AI doesn’t replace expertise, it multiplies it. As a result, less experienced but AI-trained competitors will quickly close the gap.

The optimal moment according to ancient wisdom

Hillel teaches us that the optimal time is not “later”. Applied to AI: it’s now.

Not because it’s urgent, but because it’s optimal:

- You can still learn with peace of mind

- You can experiment without customer pressure

- You can influence the standards of your profession

- You can build your team gradually

In 18 months’ time, this serenity will be gone.

The real question inspired by Hillel

The wise man’s three questions transform our lawyer’s dilemma. The question is no longer “Do I really need AI?” but “How am I going to integrate AI to reinforce who I already am and who I want to become?”

That seems to me a better question. It’s up to you to answer it.

MIT/Wharton/Harvard study: when juniors train seniors in AI, the firm risks learning failure

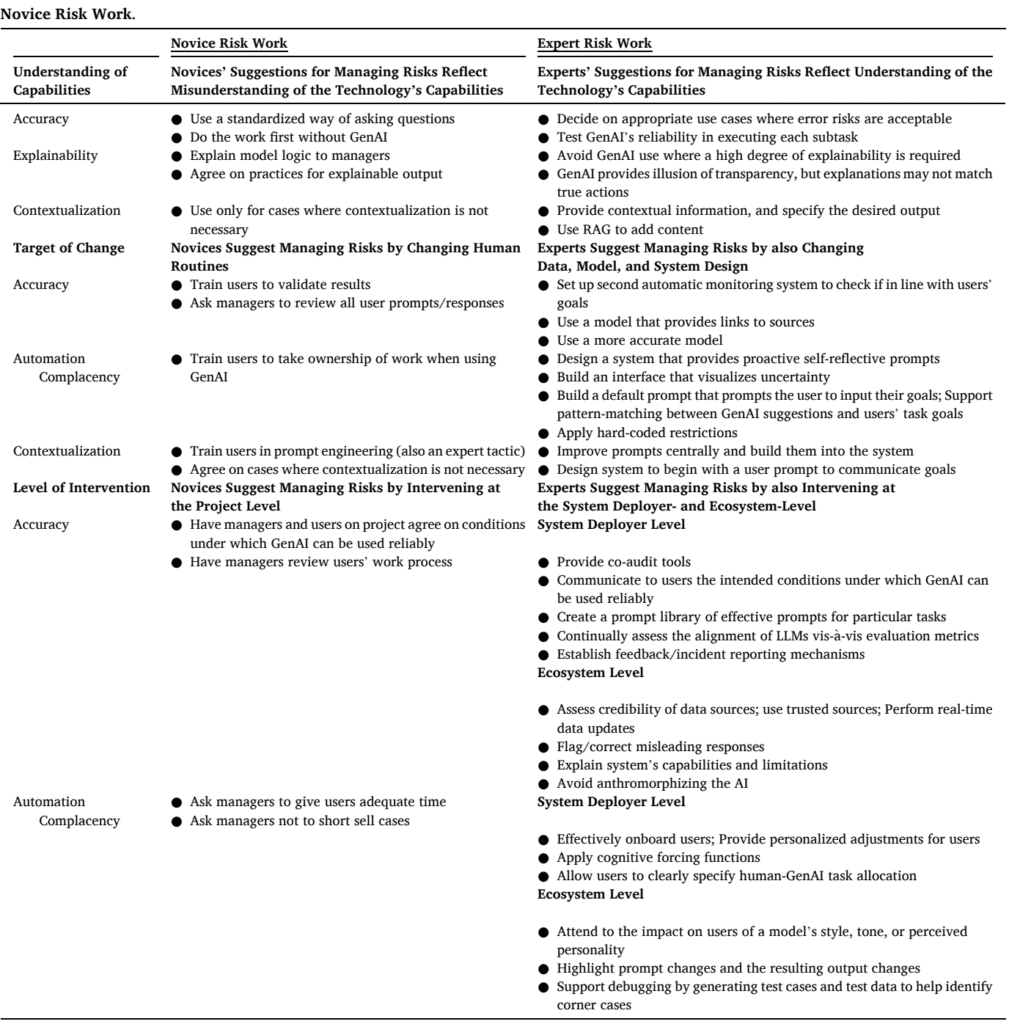

Researchers from MIT, Wharton and Harvard have published a paper entitled “Novice Risk Work: How Juniors Coaching Seniors on Emerging Technologies Such as Generative AI Can Lead to Learning Failures”.

This qualitative study involved 78 junior consultants from the Boston Consulting Group (BCG).

Objective: To understand how juniors, often perceived as the “natural trainers” of new technologies, manage the risks associated with generative AI (GPT-4) and what the implications are for an organization.

The researchers interviewed these consultants immediately after their first use of GPT-4 in an experimental setting. They then compared the risk management strategies proposed by these “novices” with those recommended by AI experts at the same time.

The reverse coaching paradox

Traditionally, juniors are more comfortable with new technologies and end up training their elders. The consultants in the study confirmed this: they expected to have to teach their managers how to use AI.

But the study reveals an unexpected obstacle. It is not resistance to change or the ego of senior staff, but the new risks AI poses to the quality and reliability of work. The juniors identified four major fears among their managers:

- Risk of inaccuracy: AI can produce false or “hallucinated” information.

- Risk of opacity: AI is a “black box” and it becomes impossible to explain the reasoning behind a result.

- Risk of decontextualization: The solution produced may be generic and not take into account the specific context of a customer or case.

- Risk of complacency: Users may put blind trust in the tool without checking or exercising critical thinking.

Novice Risk Work: the source of failure

The key finding of this study is what the researchers call “Novice Risk Work”: a phenomenon in which juniors, as AI novices themselves, propose counterproductive solutions for managing these risks.

Their solutions are well-intentioned but dangerous, because they overlook the deeper nature of the technology.

Contrasting performance of risk management strategies:

Why this study is important

This study reveals several lessons for any organization integrating AI:

- Junior coaching is a trap: Relying on younger employees to train an organization in AI can lead to “learning failures”, because their intuitive solutions introduce new risks.

- Solutions must be systemic: Individual corrective measures (better training, more checking) are insufficient. Real risk management requires organization-wide solutions: libraries of prompts, validated tools and defined use cases.

- The key skill is knowing AI’s limits: More than knowing how to use AI, it’s crucial to learn how to identify situations where it fails, and where human judgment must take precedence to avoid critical errors.

- AI governance is a management responsibility: Defining a risk management framework cannot be delegated. It is a strategic issue, and management is accountable for the safety and performance of the entire organization.

The tool to test: Perplexity

Using AI is a lot like cooking. You can have a beautiful Le Creuset pan or a Thermomix, but if you don’t have fresh, quality ingredients, you won’t get anything good to eat.

To get a good result with AI, you need quality data. The problem with tools like ChatGPT is that it’s difficult to distinguish accurate information from information that merely appears credible.

The less you know about a field, the greater the risk of using “spoiled” information.

To avoid this risk, I recommend that everyone use Perplexity.

Increasingly popular, Perplexity combines the power of a conversational AI like ChatGPT with the reliability of a traditional search engine like Google.

This hybrid tool stands out for its ability to provide fast, accurate and systematically sourced answers.

When you ask it a question, Perplexity searches the Internet in real time, gathering information from multiple sources. It then synthesizes this information into a clear, concise summary.

Perplexity’s key assets

- Intelligent search and in-depth exploration: In addition to answering your questions, Perplexity automatically suggests related questions that allow you to dig deeper into a subject from every angle. This discovery feature transforms a simple search into a true thematic exploration, revealing aspects you wouldn’t have thought of initially.

- Performance and reliability: Near-instantaneous responses with systematic citations of verifiable sources, intelligent synthesis of information from hundreds of millions of sources, and real-time access to information.

Free vs. Pro version

- Free version: Full access to Perplexity’s basic features, perfect for personal use or occasional needs. Web and mobile interface available, with related question suggestions to explore your topics.

- Pro version: Advanced features for intensive professional use, including a choice of several AI models (GPT-4o, Claude, Mistral…) and extended access to Deep Research and Perplexity Labs.

Turning every query into a starting point for further exploration, Perplexity doesn’t just answer questions: it helps you discover and deepen your topics of interest in an intuitive and structured way, right from the free version.

Before you go…

Did you enjoy this second edition? Two simple actions to make sure you don’t miss a thing:

✅ Subscribe to receive future analyses

✅ Share this newsletter with a contact interested in AI

Don’t forget to put your knowledge into practice.

P.S.: You can join the Cercle IA now and take one of our upcoming training courses:

- 🗓️ August 21-22, 2025: AI Summer School for consulting professionals. 1.5 intensive days to master the fundamentals of artificial intelligence and apply them to your consulting assignments.

- 🗓️ August 28-29, 2025: AI Summer School for Lawyers. Get ready for the new legal year with 1.5 intensive days to master the ins and outs of AI applied to law.

- 🗓️ September 2025: Launch of the 2nd cohort of Cercle IA bootcamp (the waiting list is open).

This newsletter may contain affiliate links to tools that I personally test and approve. Your purchases via these links support the editorial work (more than a day per newsletter) at no extra cost to you.